Data Silence: How AI is learning discrimination and what FeedbackFruits is doing about it

Artificial Intelligence (AI) is here. And it does not come in peace. Actually, it does not come in any particular way. It is the carrier and recipient of whichever qualities we, its creators, give to it, from rationality to biases, including racism and discrimination.

While it is commonly taken for granted that technology in itself is neutral, objective, and not affected by classic human biases [1], experience is proving this point of view wrong.

As AI becomes more and more integrated into our everyday life (sales of virtual assistants such as Google Home and Alexa, for example, are expected to grow by up to 1000% by 2023 [2]), we are discovering that technology is not free from racism. On the contrary: it can contribute to it.

As we write, the world is shaken once again by a series of strong protests from the black community, addressing the systemic oppression their communities have to endure in Western society. Both studies and daily events show that AI can inadvertently lead to discrimination. Google’s AI labeled black people as gorillas, and the company’s solution was to ban the words “gorilla” and “chimp” from the labels the system can assign [3]. Black people have been racially profiled by the police, and AI algorithms have led to racially biased health-care decisions affecting millions of black people: they were assigned significantly lower risk scores than equally sick white people, and were thus less likely to receive personalized care [4]. Not convinced? A report from the US in May 2020 showed that the AI used in the US justice system wrongly flags black people as likely to reoffend more often than white people (42% against 24%). And a Microsoft machine learning chatbot named Tay spent some time on Twitter reading content, only to start blurting out antisemitic messages.


All in all, AI is either unable to understand societal structures, or it understands them too well and replicates them exactly as they are: it strips away differences, including those it should be paying attention to, and reflects discrimination already happening in society. Maybe it even strengthens it. It appears that AI is, if anything, too neutral, and because of that it is not the unbiased heaven we were hoping for. On the contrary, just as kids learn unkind things from careless parents, AI can learn from our worst attributes.

How is FeedbackFruits preparing AI for educational challenges? Our software developers answer!

Here at FeedbackFruits we take equality very seriously. Everybody has the right to the same opportunities in education in order to thrive and achieve their full potential. In line with the guidelines of the European Commission, we are working towards an internal AI for our tools that is robust, incorporates human agency, respects privacy, and welcomes diversity, non-discrimination, and fairness [5].

We asked our developer Felix Akkermans and our Head of Research & Development Joost Verdoorn to explain how FeedbackFruits is going to tackle this AI issue, so that we can provide great tools, with great AI, in all fairness. For everybody.

"In recent years, AI systems have proven not to be as objective and neutral as we thought, given the many cases of discrimination, bias, and replication of social injustices through their algorithms. How dangerous do you think this really is for our society?"

Joost:

"Currently, tensions in our society are quite high. This may be partly due to the self-learning algorithms that decide what we see and don’t see online, playing into our pre-existing biases and showing only content we feel comfortable with. An effect to be expected is worsening segregation in our society, which I think is extremely dangerous and may lead to further human discrimination."

Felix:

“Hard to quantify! Unfortunately, I think there are three reasons to expect more failures here in the future. First, machines are not a beacon of objectivity. Machine learning, for example, might act in unwanted ways (the infamous “garbage in, garbage out”). This leaves many decision makers vulnerable to false information, especially if their trust in AI is high.


Moreover, public understanding and government regulation notoriously lag behind due to the complex and abstract nature of such technologies. This makes it hard for healthy pressure from laws and consumer preferences to arise when it is needed to incentivize companies to invest in AI ethics. Finally, AI software often comes from private companies. These currently feel little need to provide transparency about the actual algorithms and incident rates, due to their internal policy or for job-market-related reasons. Hence, it is difficult to make wise and critical choices when we choose to employ them in our society.”

"Is discrimination in AI really only a GIGO (Garbage In, Garbage Out, data-wise) problem? What other issues should developers and policy makers be careful about, in your opinion?"

Joost:

"Even more than humans, AI algorithms are in essence association machines: they learn to associate some data with some action. The expression “garbage in, garbage out” is used to highlight that improper data will result in improper action being taken by the algorithm. Where do these faulty data come from? Maybe from mistakes made by the developers, but they may also result from the fact that those developers are not familiar with, or are even biased towards, certain contexts the AI is supposed to function in, so that the system ends up discriminating among its end-users. However, what has really been missing from the discourse on AI safety is an “embracing failure” mentality. Any agent, whether human or machine, will fail at some point. What really matters is to have fail-safes in place that limit any impact these failures may have. If these failures cannot be mitigated properly, policy makers should block the AI from interacting with the general public."

Felix:

"I suspect actively investing in bias detection will go a long way, but won’t be a complete fix. Biases can occur in unexpected ways, so it’s hard to be confident you’ve accounted for all biases. Moreover, I suspect we’ll move to more human-in-the-loop designs whenever highly consequential decisions are made. While these safeguards may be necessary, they can also channel bias into the system again".

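To make the idea of bias detection a bit more concrete, here is a minimal sketch in Python of one common check: comparing false positive rates between groups, the same kind of gap the reoffending figures earlier in this article describe. The function name, the group labels, and the toy data are all hypothetical and only serve as an illustration; this is not the method used by FeedbackFruits or by any system mentioned here.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate per group.

    Each record is a (group, predicted_positive, actually_positive) tuple.
    The false positive rate is the share of truly negative cases that the
    model nevertheless flagged as positive.
    """
    flagged = defaultdict(int)    # true negatives wrongly flagged, per group
    negatives = defaultdict(int)  # all true negatives, per group
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}


# Hypothetical toy data, not taken from any real system.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]

rates = false_positive_rates(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups is a signal worth investigating, not proof of discrimination on its own. As Felix notes, biases can occur in unexpected ways, so a single metric like this cannot account for all of them.
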
"How are we dealing with this issue at FeedbackFruits, to keep our present and future AI from discriminating among users?"

Joost:

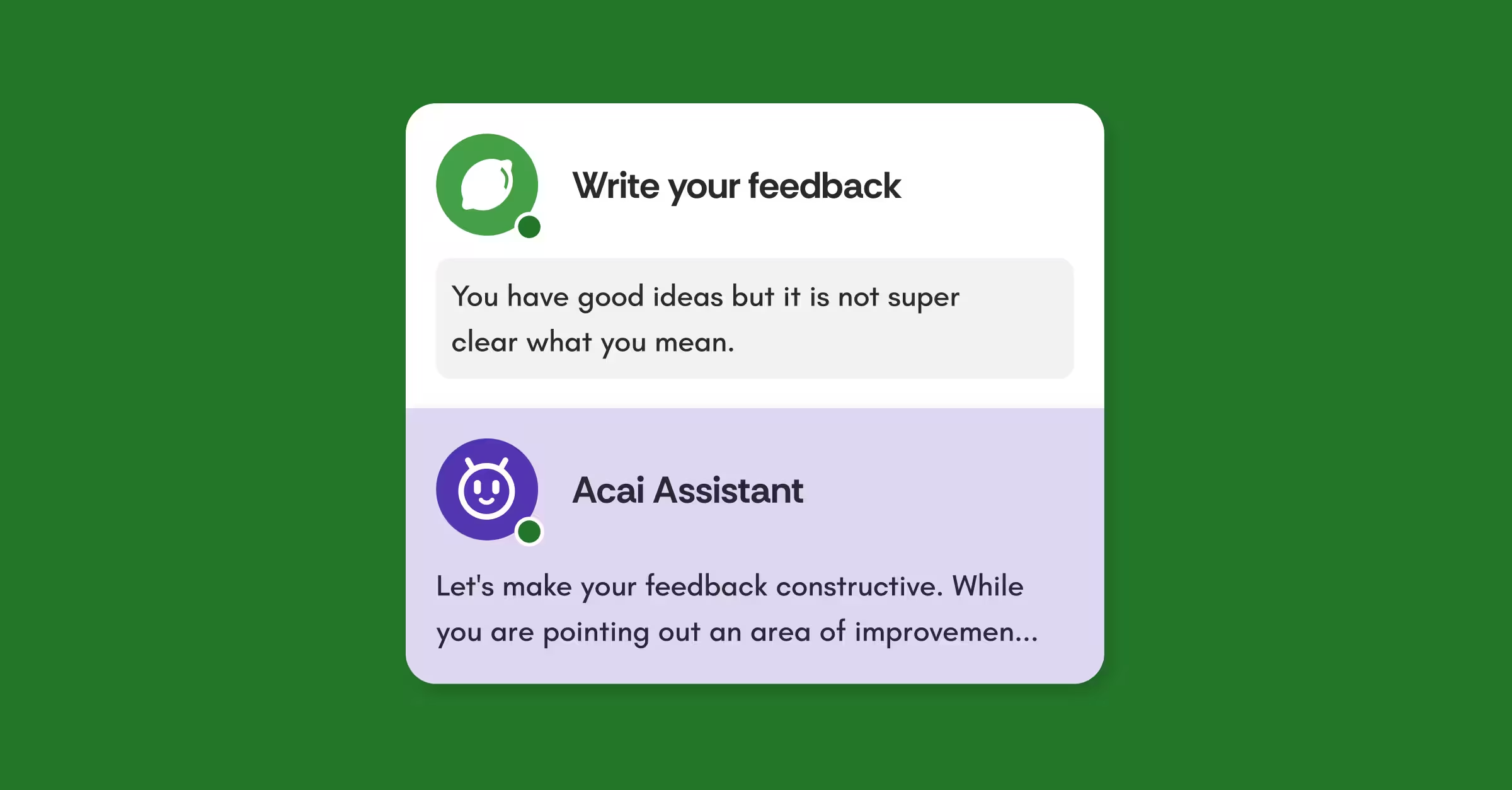

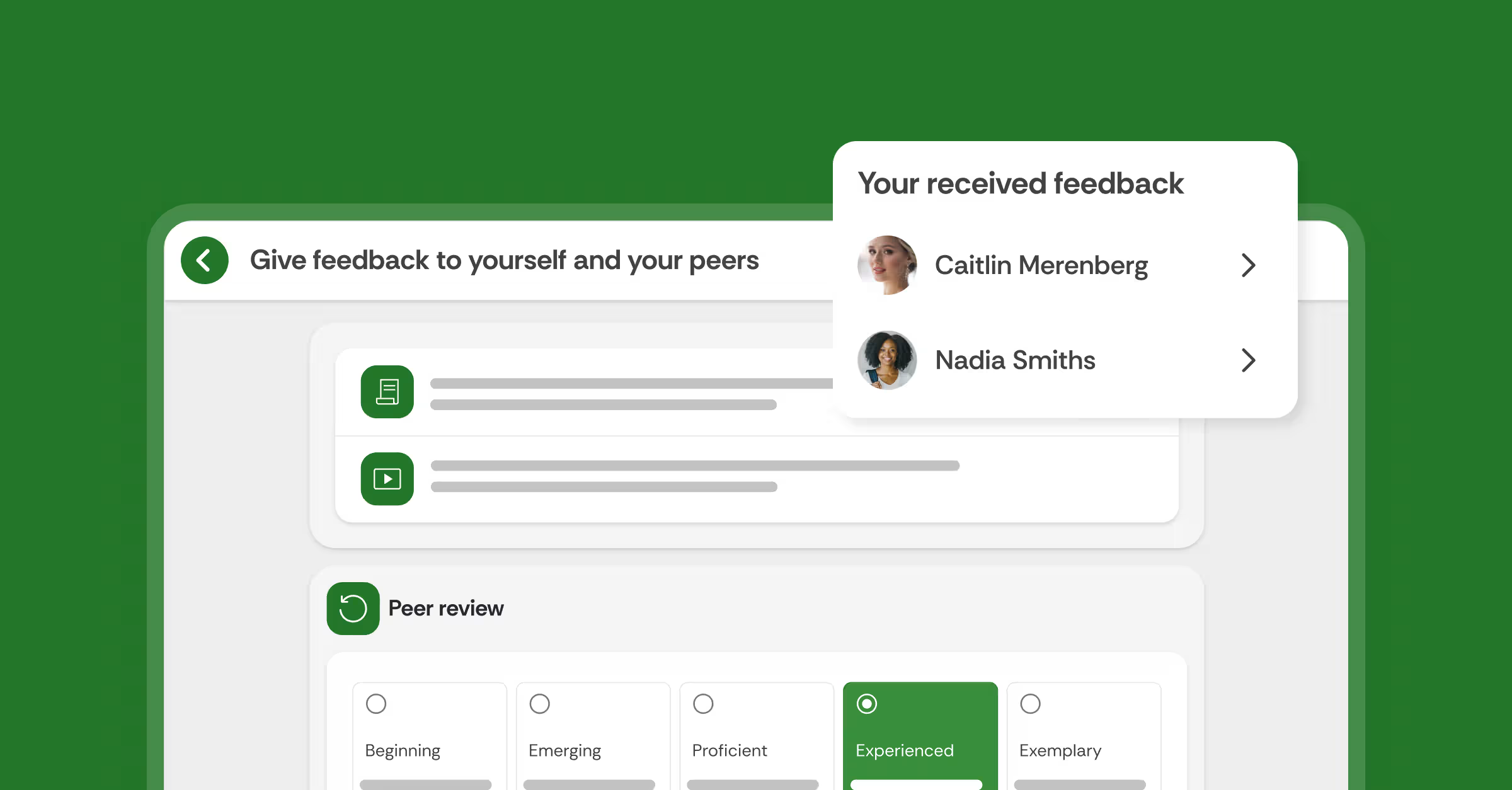

"As I mentioned earlier, we have fully embraced that our AI will make mistakes at FeedbackFruits, we are putting a large focus on making sure that these mistakes will not lead to bad outcomes. For example, we’re only using AI in the formative process (for learning), and not for grading. Furthermore, we’re fully transparent to users on which decisions were made by AI, why they were made, and users are at all times able to override such decisions. When it comes to data, we’re currently taking a closer look into our data-sourcing strategy, to make sure to attract data from a diverse range of backgrounds".

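As an illustration of the design Joost describes (marking which decisions were made by AI, recording why, and letting users override them), a data structure could look roughly like the Python sketch below. Every name in it (`Suggestion`, `made_by_ai`, `rationale`, `override`) is a hypothetical assumption made for this example, not FeedbackFruits' actual code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    """A piece of formative feedback that records where it came from."""
    text: str                            # the feedback shown to the user
    made_by_ai: bool                     # transparency: was this produced by AI?
    rationale: str                       # transparency: why it was produced
    overridden_by: Optional[str] = None  # who replaced it, if anyone
    final_text: Optional[str] = None     # the replacement text, if any

    def override(self, user: str, new_text: str) -> None:
        """Let a human replace the suggestion; the original text is kept."""
        self.overridden_by = user
        self.final_text = new_text

    def effective_text(self) -> str:
        """Return the human override if there is one, else the original."""
        if self.overridden_by is not None:
            return self.final_text
        return self.text


# Hypothetical usage: a teacher overrides an AI-made suggestion.
suggestion = Suggestion(
    text="Consider adding a citation here.",
    made_by_ai=True,
    rationale="No reference was found in this paragraph.",
)
suggestion.override("teacher_1", "No citation is needed for this claim.")
print(suggestion.effective_text())
```

Keeping the original text and rationale next to the override means a wrong suggestion remains visible and can be reviewed later, in line with the "embracing failure" mentality described above.
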

Felix:

"For now, we must act with humility: we should not create or offer products that make highly consequential decisions by themselves. This avoids the risks of carelessly venturing into, for example, AI deciding on students' grades. Instead, starting with formative feedback gives us time to safely gain experience and develop all the systems we feel are necessary for ethical use. At FeedbackFruits we want to build a strong track record through trial and error and feel confident we are well versed in putting ethics first. Nevertheless, there is currently an absence of regulation on AI safety. At FeedbackFruits we are worried about this. We want to accelerate development here in two ways. One is taking leadership by implementing the EU Guidelines for Trustworthy AI: we want to practice what we preach. The other is being publicly vocal about this concern and about the need for regulation to develop in the AI context. We want to encourage a high standard for the industry, and we are documenting this by working on our public declaration on AI in education."

"If you could add one thought on the AI-discrimination issue, what would it be?"

Joost:

"AI is a powerful tool, and can be used both for good and for bad, either intentionally or not. As Felix already mentioned, the EU has published a set of guidelines intended to limit the harm AI can do, and either policy makers or users of AI should require developers to adhere to these guidelines. They are good guidelines and a nice starting point."

Felix:

"It would be a hopeful one. The road to ridding ourselves of our racist and discriminatory tendencies has been extremely difficult so far. Seeing some of these traits creep into our machines may feel disheartening, but we’re talking about an entirely different “being” here. Where discrimination is hard to even quantify in humans, let alone correct, machines don’t suffer from these shortcomings.


Machines don’t care about political camps, don't hold emotional grudges around skin color or gender. Bias reporting algorithms won’t intentionally lie to us, and machines won’t refuse an update to fix them. I am hopeful we will be able to see a magnificent acceleration of moral progress when shifting to AI, if we invest in a safe way. I’m mostly worried that early high-profile mass-scale failures and lack of ethics regulation will lead to a forever tainted public perception, which could stifle development before it has matured to its full potential”.

Nice to read

[1] Sundar, S. S. (2020). Rise of Machine Agency: A Framework for Studying the Psychology of Human–AI Interaction (HAII). Journal of Computer-Mediated Communication. Source

[2] Source (Retrieved on 10th of June 2020)

[3] Source (Retrieved on 10th of June 2020)

[4] Source (Retrieved on 10th of June 2020)

[5] Source (Retrieved on 10th of June 2020)
