From regulation to innovation: What the EU AI Act means for EdTech

As the first comprehensive legal framework for AI, the EU AI Act provides a roadmap for ethical and responsible AI use in education. This landmark legislation is poised to influence educational technology globally, so education leaders everywhere need to understand its impact and potential ripple effects. In this article, we give a brief overview of the EU AI Act and explain how FeedbackFruits is implementing its principles, working toward a future where AI strengthens trust, upholds pedagogy, and responsibly transforms education.

What is the EU AI Act?

The European Union's Artificial Intelligence Act (AI Act) is a pioneering effort to regulate artificial intelligence, balancing innovation with ethical considerations and safety. The AI Act, the first comprehensive legal framework for AI globally, seeks to position Europe at the forefront of trustworthy AI development.

The AI Act, launched in 2024, is part of the EU Commission's initiative to create “A Europe fit for the digital age” for the 2019–2024 term.

The EU AI Act is probably one of the most controversial legal frameworks. The EU Commission published the AI Act proposal in April 2021, a political agreement was reached in December 2023, and the Act was published in the Official Journal in July 2024 and entered into force on 1 August 2024. It is expected to be fully applicable and enforceable by 2 August 2026, with some exceptions:

- Prohibitions and AI literacy obligations have applied since 2 February 2025

- The governance rules and the obligations for general-purpose AI models become applicable on 2 August 2025

- The rules for high-risk AI systems - embedded into regulated products - have an extended transition period until 2 August 2027
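For teams tracking these dates in a compliance checklist, the staged timeline can be expressed as a simple lookup. The sketch below is illustrative only: the dates are the ones listed in this article, while the labels, the `MILESTONES` list, and the `milestones_in_effect` function are hypothetical names introduced for the example.

```python
from datetime import date

# Milestone dates as listed in this article; labels are shortened paraphrases.
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibitions and AI literacy obligations apply"),
    (date(2025, 8, 2), "Governance rules and general-purpose AI model obligations apply"),
    (date(2026, 8, 2), "Act generally applicable"),
    (date(2027, 8, 2), "Extended transition ends for high-risk AI in regulated products"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of all milestones reached on or before the given date."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    # Example: which stages had already been reached on 1 September 2025?
    for label in milestones_in_effect(date(2025, 9, 1)):
        print(label)
```

A lookup like this is no substitute for legal advice, but it makes it easy to see at a glance which stage of the rollout a given date falls into.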

What does the AI Act involve?

The AI Act adopts a risk-based regulatory approach, categorizing AI applications into four distinct risk levels:

- Unacceptable Risk: AI systems that pose a clear threat to safety, livelihoods, or fundamental rights are prohibited. This includes practices such as harmful manipulation, social scoring, and certain types of biometric surveillance.

- High Risk: AI applications that could pose serious risks to health, safety, or fundamental rights fall into this category. Examples include certain AI applications used in critical infrastructure, education, employment, and essential services like credit scoring. These systems are subject to strict requirements to ensure their safety and reliability.

- Limited Risk: AI systems with limited risks are subject to specific transparency obligations. For instance, users should be aware they are interacting with an AI system. This level covers applications such as deepfakes and chatbots.

- Minimal or No Risk: The majority of AI systems, which pose minimal or no risk, are not subject to additional regulatory requirements. These can be put on the market without any compliance requirements applied.

For more details on the AI systems classified under the framework, you can visit this website: https://artificialintelligenceact.eu/high-level-summary/

This risk-based approach is what differentiates the EU AI Act from the GDPR. Rather than applying one uniform set of obligations to every AI system, the Act offers a systematic way to decide how much risk a system presents to society and individuals, and scales the requirements accordingly.

Implications for Higher Education

The implications of the EU AI Act for the education sector are profound. Several uses of AI in education are classified as high-risk, which means that these applications must adhere to strict compliance requirements. This classification reflects the significant impact AI can have on students' educational trajectories and their future careers.

High-Risk AI Applications in Education

Within the education sector, several applications fall under the high-risk category:

- AI systems that determine access to educational opportunities, such as admissions decisions.

- AI tools that evaluate learning outcomes, including grading systems.

- Monitoring systems that detect prohibited behavior during assessments.

These applications must comply with rigorous safety and documentation standards to mitigate risks. For instance, any AI system used for grading must ensure accuracy and human oversight to maintain fairness and transparency.

Prohibited Practices in Education

One of the most notable aspects of the EU AI Act is the prohibition of emotion inference systems in educational contexts. This includes any AI applications that attempt to interpret students' emotions through biometric data. The rationale behind this prohibition is to protect students' fundamental rights and prevent manipulative practices that could adversely affect their educational experience.

How can we continue to protect the fundamental rights and freedoms of individuals while implementing innovative technologies to improve teaching and learning?

FeedbackFruits design principles for the ethical use of AI

At FeedbackFruits, we are committed to developing AI tools that align with the ethical standards set forth by the EU AI Act. To achieve this, we have established a framework of guiding principles that govern our AI applications. These principles are designed to safeguard the interests of three key stakeholders: students, teachers, and institutional administrators.

Core Design Principles

Our design principles focus on the following key areas:

- Pedagogy Before Technology: Our approach emphasizes that educational pedagogy should guide the development and application of AI tools, ensuring that technology enhances teaching and learning rather than dictating it.

- Transparency: We prioritize clear communication about how AI operates within our tools, allowing users to understand its role in their educational experience.

- Human Oversight: Our systems are designed to include human oversight at critical decision points, ensuring that educators retain control over assessments and feedback.

- Right to Object: Students have the opportunity to challenge AI-generated outcomes, promoting accountability and trust in the system.

- Data Protection: We uphold stringent data protection standards, ensuring that student information is anonymized and not used without consent.

Following these principles, we map our products and features to a risk classification. Internally, we break this risk assessment down into three criteria:

- Pedagogical risk: High stakes (e.g. summative) vs. low stakes (formative) use cases

- Technical risk: How reliable we expect the technology to be, based on foreseeable error rates, false positive and false negative rates, and similar measures

- Exposure risk: We take into account risk multipliers such as the expected number of users and whether a feature can block the critical path of the user journey
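To make the three criteria more concrete, here is a minimal sketch of how such an assessment could be encoded. It is not FeedbackFruits' internal implementation: the `FeatureProfile` fields, the thresholds, and the rule for combining flags into a level are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class FeatureProfile:
    name: str
    summative: bool             # pedagogical risk: True means high stakes
    expected_error_rate: float  # technical risk: estimated share of wrong outputs (0 to 1)
    expected_users: int         # exposure risk: more users multiplies the impact
    blocks_critical_path: bool  # exposure risk: can the feature block the user journey?


def classify(feature: FeatureProfile,
             error_threshold: float = 0.05,
             user_threshold: int = 10_000) -> tuple[str, list[str]]:
    """Return a risk level and the criteria that raised a flag.

    The thresholds and the combination rule are hypothetical.
    """
    flags = []
    if feature.summative:
        flags.append("pedagogical")
    if feature.expected_error_rate > error_threshold:
        flags.append("technical")
    if feature.blocks_critical_path or feature.expected_users > user_threshold:
        flags.append("exposure")

    # Assumed rule: any high-stakes use, or two or more flags, counts as high risk.
    if "pedagogical" in flags or len(flags) >= 2:
        level = "high"
    elif flags:
        level = "medium"
    else:
        level = "low"
    return level, flags


if __name__ == "__main__":
    formative = FeatureProfile("formative_writing_feedback", False, 0.03, 5_000, False)
    summative = FeatureProfile("summative_auto_grading", True, 0.10, 50_000, True)
    print(classify(formative))
    print(classify(summative))
```

Returning the list of flags alongside the level keeps the assessment explainable: a reviewer can see which criterion pushed a feature into a higher category.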

Below are some examples of how we apply these design principles in our products:

AI-powered formative academic writing feedback on student submissions

A feature of our AI solution, Acai, can generate instant, formative feedback on technical aspects of students’ academic writing submissions (e.g., grammar, vocabulary, citation, and structure). Based on the pedagogy-first principle, this feature is low-risk since students can choose to follow or ignore the feedback without any consequences. We also adopt the transparency and right-to-object principles by clearly explaining the feature and allowing students to opt out of receiving feedback.

In addition, feedback generated by Acai is accompanied by explanations and options for students to object to the given feedback, reflecting the interpretability and right-to-object principles. Not only are students able to understand why a comment was made on their work, but they are also given the freedom to assess the feedback and choose their own actions.

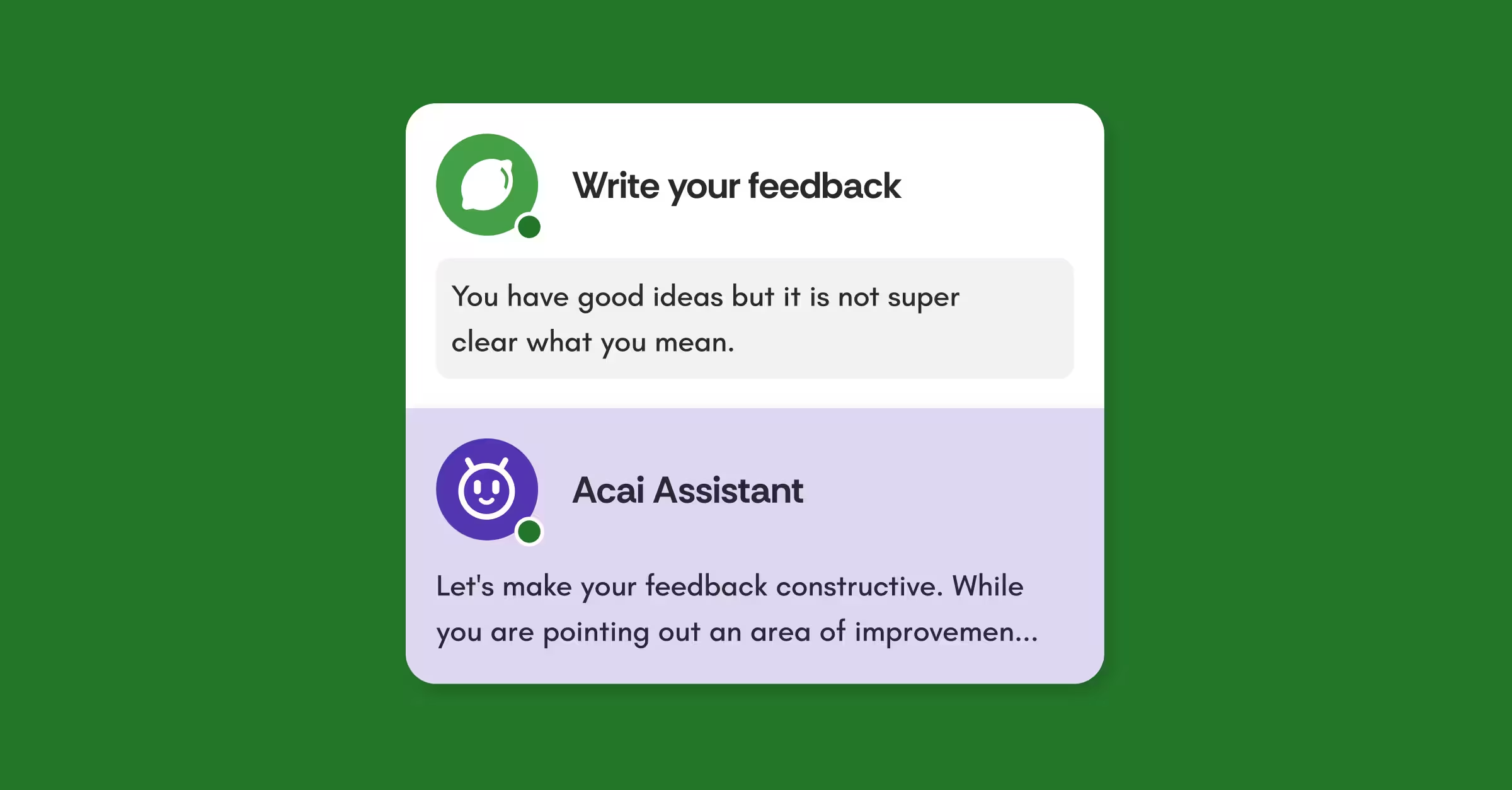

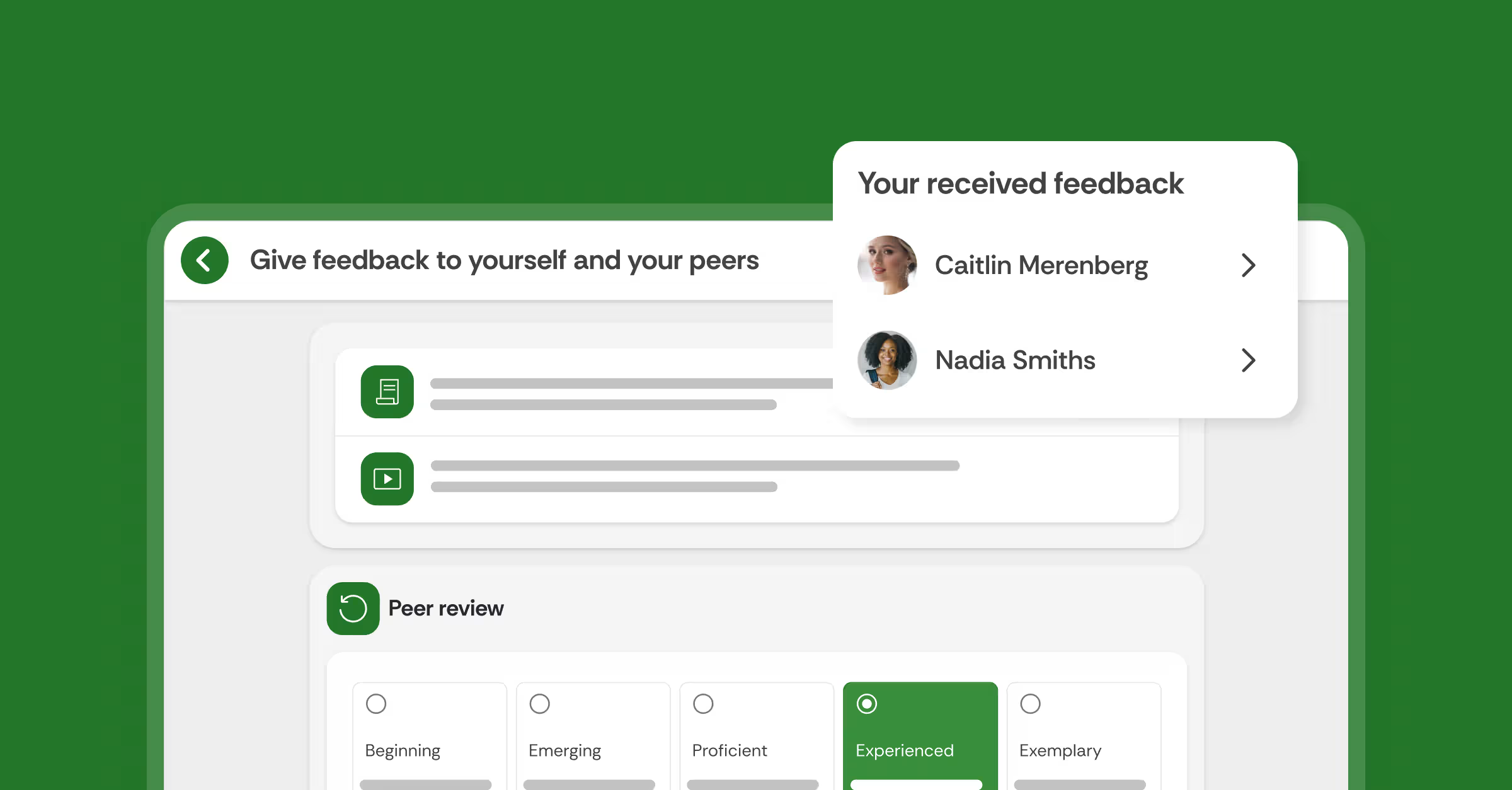

AI Feedback Coach to guide students in feedback writing

Automated Feedback Coach, another feature of Acai, helps guide students throughout their feedback process by giving timely advice and suggestions.

For this feature, we aim to ensure transparency from the beginning with clear explanations of the function and how student data will be used. Teachers can choose whether they want to enable the feedback coach or not, which reflects the right-to-object principle. To safeguard data privacy, we openly announce that student data are not used to train the language model.

Read more about our Transparency Note.

Low-stakes grading of student discussion contributions

Our latest addition to Acai is the Grading Assistant feature, which provides suggestions on feedback and grading of students’ work. This aims to help teachers deliver faster, more consistent feedback at scale. Because we perceive this to be a high-risk development, we aim to minimize the pitfalls by adhering to our principles: being transparent that AI drives the feature, letting teachers choose whether to use auto-grading, and ensuring data privacy.

When using the feature, teachers see a clear explanation for each suggestion and have the option to object to it, reflecting the interpretability and right-to-object principles.
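The human-oversight pattern described here can be sketched in a few lines. This is a hypothetical illustration, not Acai's actual code: the `GradeSuggestion` class, its fields, and its method names are assumptions. The point it shows is that an AI suggestion carries an explanation and never becomes a final grade until a teacher accepts it or objects and sets their own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradeSuggestion:
    student_id: str
    suggested_grade: float
    explanation: str                      # shown to the teacher with the suggestion
    status: str = "pending"               # "pending", "accepted", or "objected"
    final_grade: Optional[float] = None   # stays None until a teacher decides
    objection_reason: Optional[str] = None

    def accept(self) -> None:
        """Teacher confirms the AI suggestion as the final grade."""
        self.status = "accepted"
        self.final_grade = self.suggested_grade

    def raise_objection(self, teacher_grade: float, reason: str) -> None:
        """Teacher rejects the suggestion and records their own grade."""
        self.status = "objected"
        self.final_grade = teacher_grade
        self.objection_reason = reason

    def is_final(self) -> bool:
        """A grade can be released only after a human decision."""
        return self.status != "pending"


if __name__ == "__main__":
    suggestion = GradeSuggestion("student-1", 7.5, "Meets two of three rubric criteria")
    print(suggestion.is_final())   # no teacher decision yet
    suggestion.raise_objection(8.0, "Third criterion is met in the final paragraph")
    print(suggestion.status, suggestion.final_grade)
```

Keeping the objection reason alongside the final grade also leaves an audit trail, which supports the transparency principle.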

FeedbackFruits' Commitment to Ethical AI

As we navigate the complexities of the EU AI Act, FeedbackFruits is dedicated to implementing these principles in our product development. We believe that AI can transform education positively, but it must be done responsibly. By adhering to the guidelines established by the Act, we aim to foster an environment where AI supports educators and students alike.

We recognize that the landscape of AI in education is continually evolving. To stay ahead, we actively seek feedback from educators and stakeholders to refine our practices and ensure our tools serve their intended purpose. This collaborative approach allows us to adapt to new challenges and maintain compliance with emerging regulations.

We have also signed the AI Pact Voluntary Pledges, reinforcing our commitment to ethical AI development and deployment ahead of the EU AI Act becoming fully applicable. Read more in our press release.

Conclusion

The EU AI Act represents a critical step towards establishing a framework for responsible AI use in education. By understanding its implications and committing to ethical practices, EdTech providers and educational institutions can pave the way for a future where AI strengthens trust, upholds pedagogy, and transforms teaching and learning in responsible and meaningful ways.

FeedbackFruits' ethical AI framework serves as a roadmap for this journey, demonstrating how a proactive, stakeholder-centric approach can unlock the full potential of AI while mitigating the risks. As the education landscape continues to evolve, the lessons learned and the principles established by FeedbackFruits can serve as a guiding light for the entire EdTech ecosystem, ensuring that the integration of AI remains firmly grounded in the values of transparency, interpretability, and responsible innovation.

Join the Conversation

We encourage you to share your thoughts on the EU AI Act and its impact on education. How do you see AI transforming your educational practices? What concerns do you have regarding its implementation? Let’s work together to create a responsible future for AI in education.
