How to use AI in Feedback and Assessment without losing academic integrity

AI can improve feedback quality and reduce workload, but only when it’s used with structure and oversight. Here’s how institutions can adopt AI responsibly without compromising academic integrity.

AI is showing up everywhere in higher education, and feedback and assessment are right at the centre of it.

Some institutions are excited. Others are cautious. Most are a bit of both.

Because the opportunity is real: AI can help educators reduce repetitive workload, give students clearer guidance, and scale feedback across large cohorts.

But so are the risks: trust, standards, transparency, and academic integrity.

And here’s the key point:

AI in feedback and assessment is not a technical feature. It’s a design and governance challenge.

When AI is used without structure, it creates uncertainty. When it’s embedded in the right workflows, it can strengthen learning while keeping educators fully in control.

This article breaks down how to approach AI in feedback and assessment responsibly, with academic integrity protected from the start.

The problem isn’t AI. It’s unstructured AI.

Right now, the most common AI scenario looks like this:

Students use AI because it’s available.

Educators react because they have to.

Policies get written quickly.

Everyone is trying their best, but the rules feel unclear.

That’s where risk grows.

Not because AI is “bad,” but because it’s being used outside a shared framework. No consistent expectations. No common workflow. No clear role for human oversight.

That’s why guidance like UNESCO’s recommendations on generative AI in education focuses so strongly on transparency, ethics, and governance.

In short: AI needs structure.

What responsible AI in assessment actually means

When people say “ethical AI in assessment,” they usually mean one thing:

“We want the benefits, without losing control.”

That’s a good starting point. And it can be translated into a few practical principles.

Educators stay in control

AI can support decisions, but it should never make them. Educators remain responsible for standards, feedback quality, and final judgement.

Students stay accountable

AI should help students learn, practise, and reflect. It should not become a shortcut that replaces thinking.

Transparency stays visible

Students should know when AI is part of the process, what it’s doing, and what it’s not doing.

Governance stays intentional

AI needs policies and workflows that protect fairness, integrity, and consistency across departments, not just individual courses.

This is the foundation of trustworthy AI in education: human oversight, clarity, and responsible use.

Why unstructured AI use increases integrity risks

Academic integrity concerns are not just about cheating.

They’re also about assessment quality, fairness, and trust.

When AI use is unstructured, institutions can run into issues like:

- students submitting work they did not truly produce

- educators receiving polished but shallow responses

- inconsistent “AI rules” across courses and departments

- unclear boundaries for what’s allowed and what’s not

- more time spent investigating rather than teaching

This is where assessment integrity and AI become deeply connected. Without shared workflows and governance, the experience becomes inconsistent and hard to defend.

That’s why the real question is not “Should we allow AI?”

It’s: How do we use AI in a way that strengthens learning and protects standards?

AI works best when it’s part of the workflow

The strongest institutional approaches to AI are not feature-first.

They are workflow-first.

Instead of adding AI on top of existing assessment chaos, they design clear processes where AI has a defined role.

This is what responsible AI-assisted assessment in higher education looks like:

- structured criteria

- clear expectations for students

- consistent workflows across courses

- human oversight at every step

- visibility for leadership and governance teams

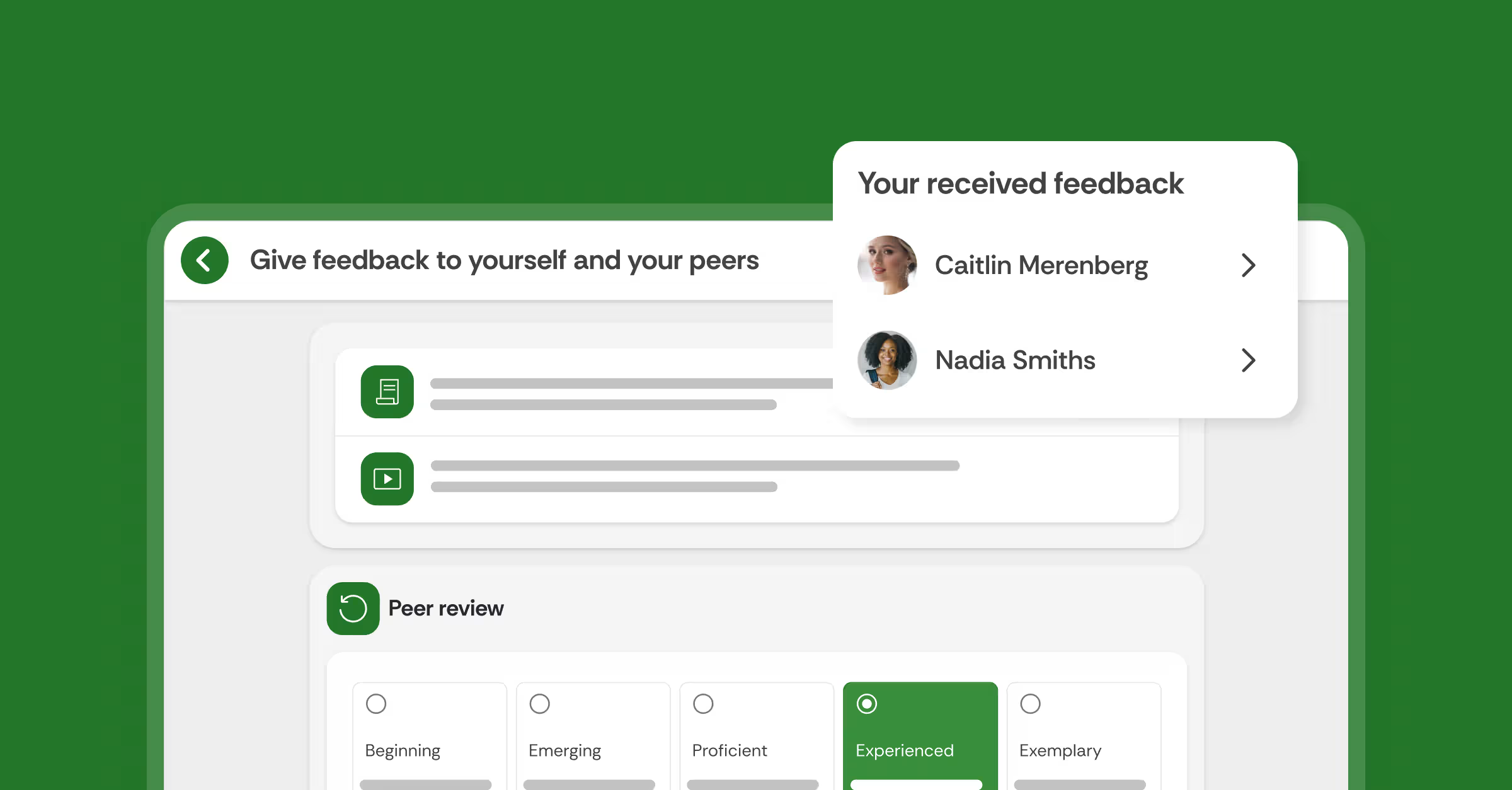

This is also why institutions benefit from a structured, LMS-integrated system like the Feedback and Assessment solution: it gives AI a place to support learning without taking over the process.

What AI should actually do in feedback and assessment

AI becomes genuinely useful when it supports the parts of assessment that are hard to scale manually.

Here are a few high-impact examples of AI-assisted feedback done well.

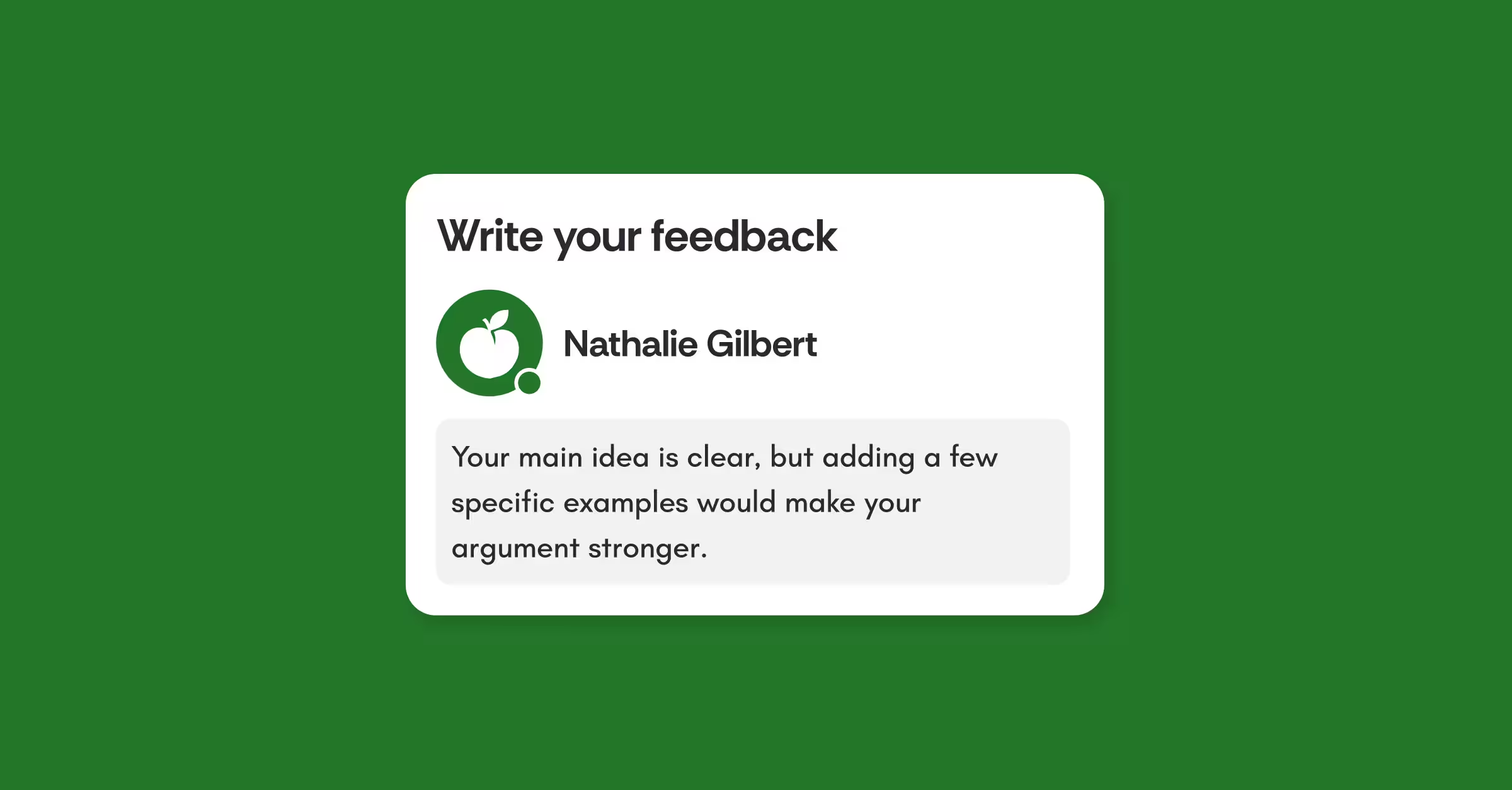

1) Help educators respond faster, without losing quality

When cohorts are large, educators often face a painful trade-off: speed or depth.

This is where ACAI, our AI co-assistant, can help. It supports educators with faster feedback drafting and more consistent language, while keeping judgement and standards fully human-led.

The goal is not automated grading. It’s better feedback workflows, at scale.

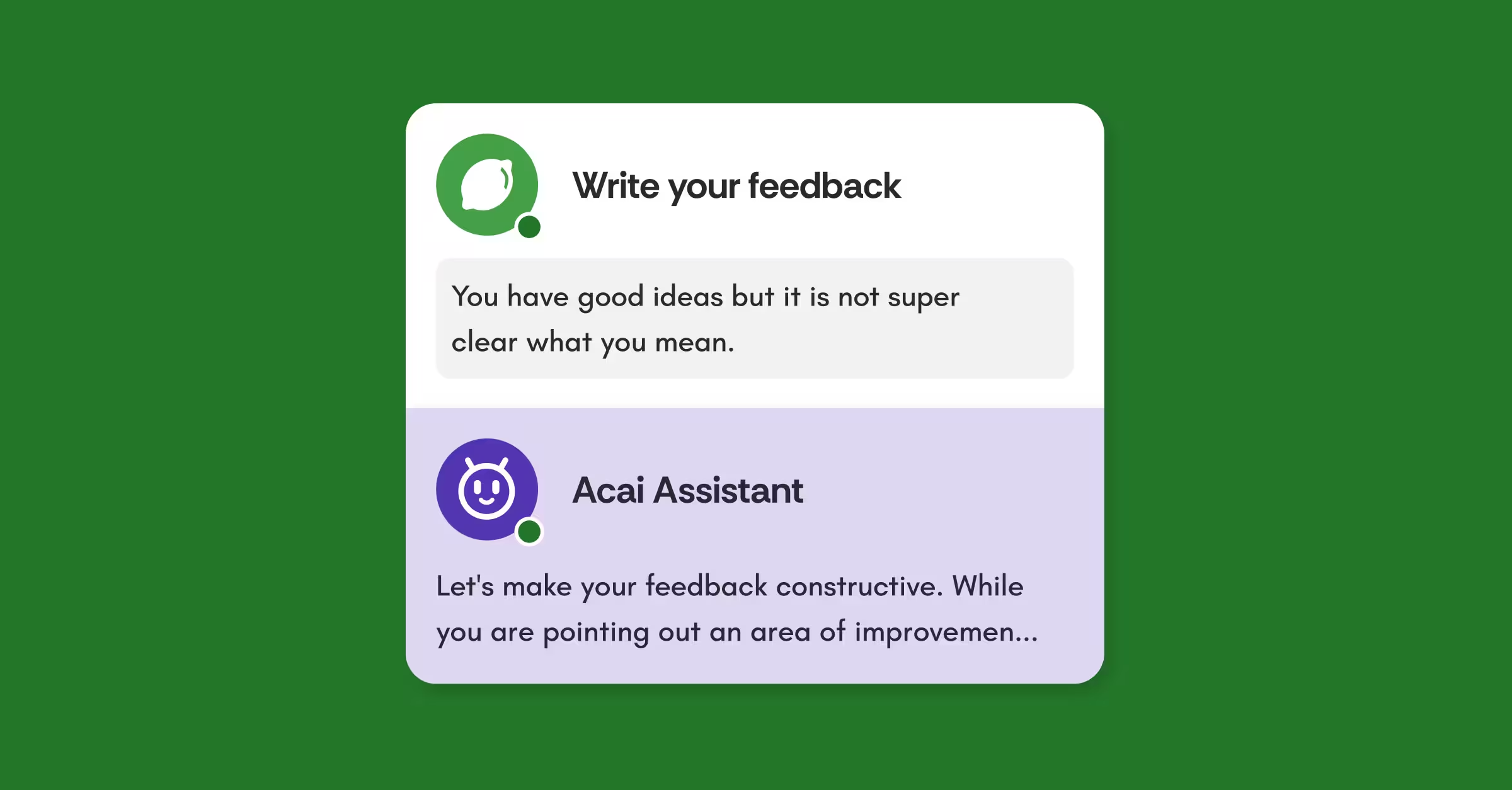

2) Help students practise before they submit

One of the best ways to protect academic integrity is to reduce last-minute panic.

When students have space to practise, reflect, and improve before submission, the pressure to “outsource” thinking drops.

That’s why AI Practice matters. It supports students in building confidence and feedback literacy before the final assessment moment.

3) Support consistency across teaching teams

At institutional scale, consistency is one of the hardest things to maintain.

AI can support clearer, more aligned feedback patterns across multiple markers, while educators stay in control of final decisions and course standards.

This is where ethical AI in assessment becomes practical: it improves reliability without removing accountability.

What responsible AI looks like (in real university terms)

A good AI approach in assessment should feel like this:

- educators know what AI is doing, and can override it anytime

- students know what’s allowed, and why

- AI supports reflection and learning, not shortcuts

- workflows stay consistent across departments

- leadership can stand behind the approach confidently

That’s also why governance matters. It’s not about slowing innovation. It’s about making innovation sustainable.

The key message: AI needs a system, not improvisation

Institutions do not need random AI usage across courses.

They need a structured, LMS-integrated feedback and assessment system that supports responsible AI use with educator oversight.

This is where the Feedback and Assessment solution becomes more than a tool.

It becomes the structure that makes AI safe to adopt and easier to manage at scale, while improving consistency, workload, and learning impact.

Conclusion: Academic integrity is protected through design

AI is not going away. And it shouldn’t.

Used well, it can help educators scale feedback and support student learning more effectively.

But the institutions that succeed will be the ones that treat AI as a design and governance challenge, not a feature.

Because when AI is unstructured, risk grows.

When AI is embedded in a clear workflow with human oversight, integrity stays protected and learning stays at the centre.

That’s what responsible AI in feedback and assessment should look like.

Explore how AI supports feedback and assessment responsibly

If your institution wants to adopt AI without losing trust, standards, or educator control, the next step is seeing what structured AI support looks like in practice.

Explore how AI supports feedback and assessment responsibly with the Feedback and Assessment solution.
