Why feedback and assessment are so hard to do well at scale

Feedback and assessment shape learning quality, student confidence, and trust in a degree. But as institutions scale, what works in one classroom often breaks across programs. This article explains why it happens, where the breakdowns come from, and what it takes to make feedback and assessment consistent, fair, and sustainable at scale.



Feedback and assessment in higher education shape everything that matters: learning outcomes, student confidence, teaching quality, and the credibility of a degree.

When it works well, students know what “good” looks like and how to improve. Educators can guide learning with clarity. Institutions can demonstrate quality and fairness across programs.

But as universities grow, something frustrating happens:

Feedback and assessment become harder to do well, not because educators care less, but because the work stops scaling.

To understand why assessment and feedback at scale break down, we need to look beyond individual teaching practice and focus on what’s happening at the institutional level.

Because this is not a teaching skills issue. It’s a scalability issue.

Feedback and assessment are not just practices. They are workflows.

On paper, assessment looks simple: students submit work, educators evaluate it, students receive feedback, learning improves.

In reality, effective feedback is not just comments at the end. It works best when students understand expectations early, practise, receive input, and improve over time. This aligns with widely adopted principles of good feedback practice in higher education, including the idea that feedback should be usable, timely, and connected to improvement (Nicol & Macfarlane-Dick, 2006).

That’s why feedback and assessment in higher education are deeply connected to learning design. When outcomes, tasks, and criteria align, feedback becomes useful, timely, and actionable.

But as soon as you scale beyond a single course, alignment becomes harder to maintain. Different modules interpret criteria differently. Different educators structure tasks in different ways. Different departments adopt different tools and processes.

And what should feel consistent across a program starts to feel uneven.

That’s the point where feedback and assessment stop being only pedagogy and become something else: an institutional workflow challenge.

The scalability gap: when good practice doesn’t travel

In a class of 30, an educator can often make things work through experience and effort.

They can clarify expectations in person, adjust criteria informally, and keep marking consistent through quick coordination.

But in a program with hundreds of students and multiple teaching teams, that flexibility becomes a risk.

Because at scale, small inconsistencies add up to big problems:

- Students receive mixed messages about what matters

- Criteria are applied differently across markers

- Moderation becomes harder to coordinate

- Staff spend more time aligning and troubleshooting

- Confidence in fairness starts to drop

This is why scalable assessment in higher education depends on more than good intentions.

It depends on whether the institution has the structure to support consistent execution.

Where fragmentation creates breakdowns

Most universities don’t design assessment ecosystems from scratch.

They build them gradually: a submission tool here, a rubric template there, a peer review add-on for one department, spreadsheets for moderation, email threads for extensions.

Each decision makes sense locally. But together, they create fragmentation.

And fragmentation creates predictable breakdowns.

1) Students don’t get consistency

When feedback lives across multiple tools and formats, students struggle to understand expectations across modules.

They might get a rubric in one course but not another. Peer feedback in one assignment but only a grade in the next. Comments in the LMS here, PDFs there.

Over time, students stop focusing on learning and start focusing on decoding the system.

This is one reason why many institutions are revisiting their assessment and feedback strategy and governance models, not just individual course design.

2) Educators rebuild the same structures again and again

When there is no shared workflow, every course becomes a fresh setup.

Rubrics get recreated. Instructions get rewritten. Peer review needs to be re-explained. Criteria drift happens quietly across teaching teams.

This is a major driver of faculty assessment workload, especially in large programs where coordination is already complex. A practical way to reduce that repeat work is to reuse proven rubric structures and adapt them across courses, for example with this assessment rubrics template collection.

If you want a practical view of what this looks like in real teaching contexts, explore how institutions reduce workload through structured workflows in the FeedbackFruits success stories.

3) Leaders lose visibility

Fragmentation also makes it difficult to answer institutional questions like:

- Are we applying standards consistently across cohorts?

- Are students receiving timely feedback?

- Where are the bottlenecks in assessment delivery?

- Which courses struggle most with workload and moderation?

Without visibility, improving quality becomes harder to manage across programs.

This is where consistency and fairness in assessment start to suffer, even when everyone is trying their best.

The real workload problem starts before grading

When people talk about assessment workload, they often mean marking time.

But in practice, the pressure often begins long before grading starts.

In large-scale teaching, the most time-consuming work is often the coordination:

- building and calibrating rubrics across markers

- clarifying expectations for diverse cohorts

- managing extensions, resits, and exceptions

- aligning tasks with outcomes and quality assurance

- answering repeated questions about criteria and process

These tasks don’t scale neatly with student numbers. They multiply.

That’s why institutions trying to improve their assessment and feedback strategy are asking a different question:

How do we reduce manual coordination without lowering quality?

A big part of the answer is moving from scattered processes to structured workflows supported inside the LMS.

This is where an LMS-integrated feedback and assessment tool becomes valuable: it reduces context switching and makes quality easier to repeat across courses and teams.

If you want a deeper breakdown of what a modern platform includes (and what to look for), you may also like: What Is a Feedback and Assessment Solution

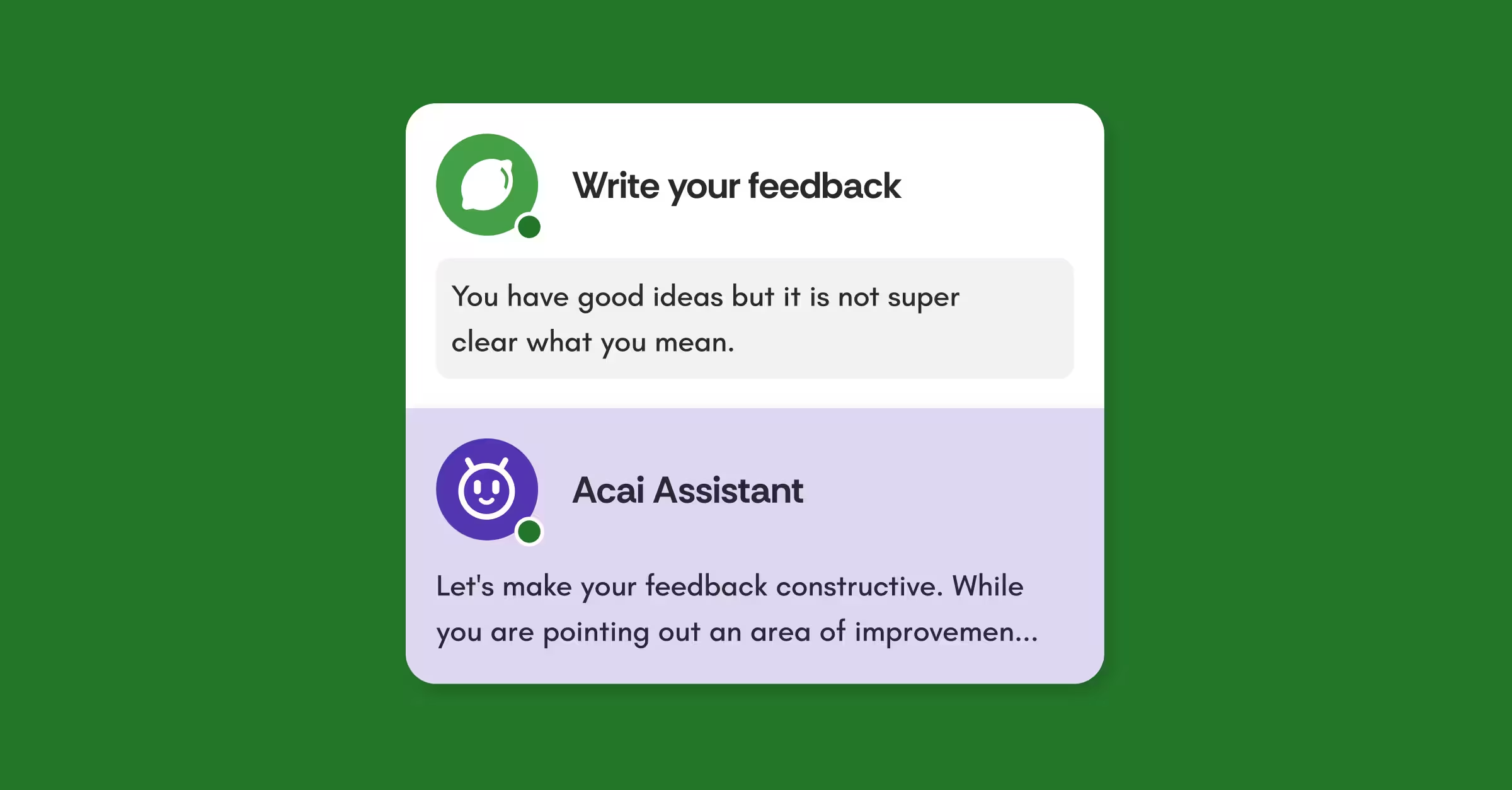

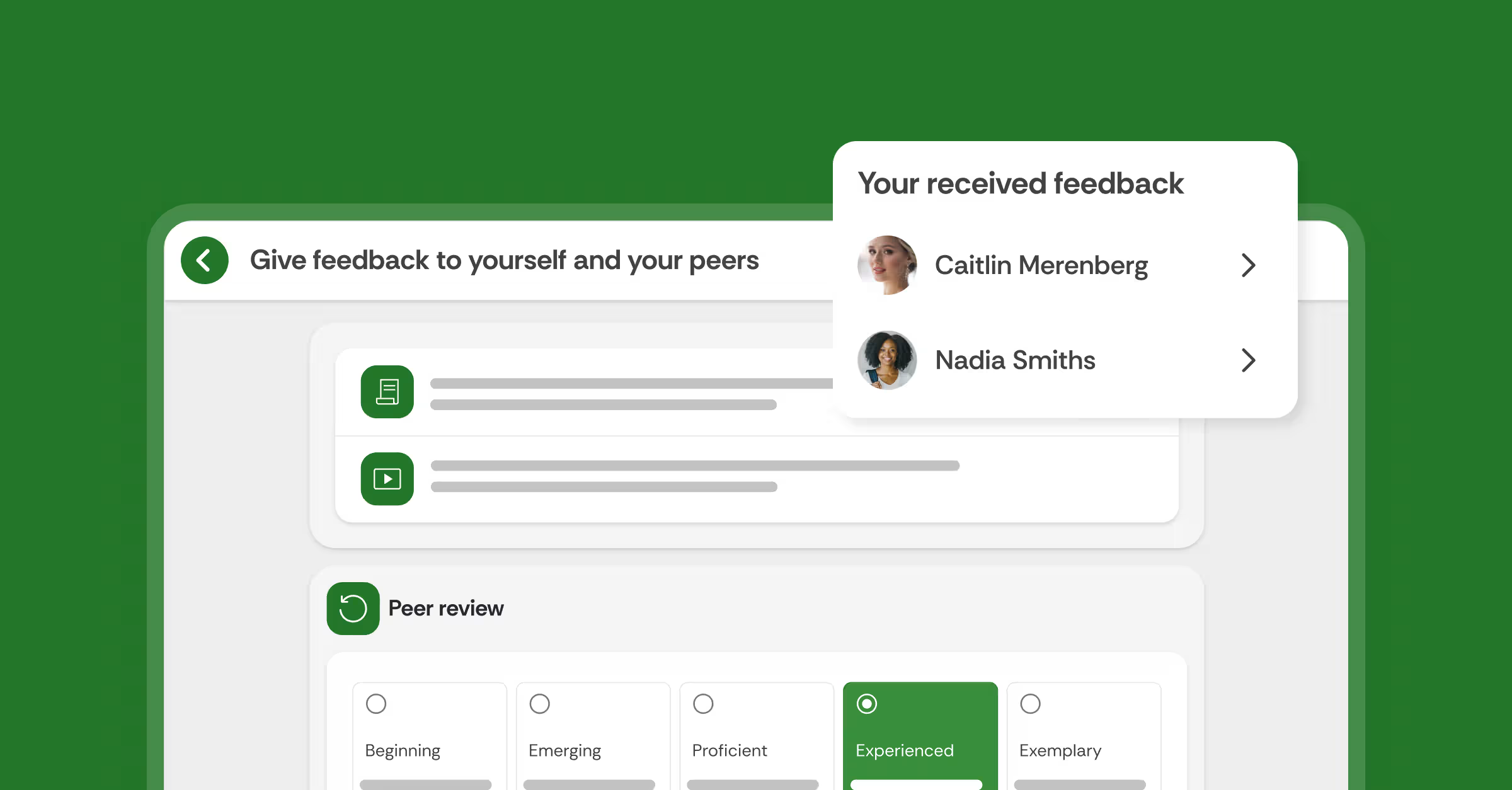

Peer review can scale feedback, but only when it’s designed properly

It makes sense that more institutions are investing in peer review.

Done well, peer review helps students practise applying criteria, build evaluative judgement, and engage with feedback as a learning skill, not a one-time event. This aligns with research showing that peer assessment supports learning when criteria and guidance are clear (Topping, 1998).

In other words, it supports learning, not just grading.

But peer review only works at scale when it has the right foundations.

Without clear criteria and structure, it can quickly turn into vague comments, uneven quality, student frustration, and extra workload for educators who have to “fix” the process afterwards.

Peer review becomes a scalable lever when it is part of a repeatable workflow: clear rubrics, structured prompts, and a process students understand from the start.

That’s the difference between a one-off activity and a sustainable approach to assessment and feedback at scale.

To see what structured peer review looks like in practice, explore ready-to-use activities and examples in the FeedbackFruits template library.

New pressures are making scalability even harder

Higher education is changing fast. Three trends are making scalable feedback and assessment more urgent:

Hybrid and online learning

Flexible delivery models demand workflows that work across synchronous and asynchronous teaching, across campuses, and across different student contexts. Many institutions are navigating these challenges alongside broader digital transformation agendas (EDUCAUSE).

AI in assessment

Generative AI has created both opportunity and uncertainty. Institutions need ways to maintain trust and standards while supporting educators without increasing workload. UNESCO’s guidance on AI in education highlights both the potential and the need for responsible governance (UNESCO).

For a practical perspective on how to approach this shift, watch our webinar replay: Process over output: Using feedback to measure learning

Authentic and competency-based assessment

More programs are moving toward real-world tasks, teamwork, reflection, and skill demonstration. These approaches need richer feedback loops, which are difficult to sustain through manual processes alone.

Together, these trends raise the stakes.

Because the question is no longer just “how do we give feedback?”

It becomes: How do we deliver high-quality feedback and assessment consistently across programs, cohorts, and teaching teams without burning people out?

What changes when institutions design for scale

Institutions that succeed tend to make one key shift:

They stop relying on individual effort to hold the system together.

Instead, they design feedback and assessment as a shared capability.

When structured workflows are supported inside the LMS and aligned across teaching teams, three things improve quickly:

- Educators spend less time rebuilding and coordinating, and more time supporting learning

- Students experience clearer expectations and more usable feedback

- Leaders gain visibility into quality and consistency, making improvement easier to manage across programs

This is how scalable assessment becomes real: not by asking people to do more, but by making good practice easier to repeat.

If you want a practical starting point, explore the guide: From feedback to impact: Your guide to scalable, authentic assessment

Conclusion: Scaling feedback and assessment is a design challenge

Feedback and assessment in higher education are too important to depend on fragmented tools, inconsistent processes, and manual coordination.

When institutions grow, the cracks are not caused by lack of care or expertise.

They appear because the infrastructure was never designed to support scale.

That’s why improving feedback and assessment is not only a pedagogical challenge.

It’s a workflow and design challenge.

Explore how institutions are scaling feedback and assessment

If your goal is to improve quality, consistency, and faculty assessment workload across programs, the next step is not asking educators to work harder.

It’s creating a workflow that supports excellent practice at scale.

Explore how institutions are scaling feedback and assessment with the Feedback and Assessment solution.
