Practical activities to leverage AI for engagement and skills development

Without doubt, the rise of generative AI tools like ChatGPT has sent institutions into a whirlwind, with concerns over academic integrity, plagiarism, the erosion of students’ real-life skills, and more. However, according to Dr. John Fitzgibbon, Associate Director for Digital Learning Innovation at Boston College, these issues are the least of what institutions should be worried about:

“If we're worried about tools and technology taking over and defeating us, that's the wrong way to think about it. We need to think about humans and technology working together. So we've got to figure out how to work with AI technology.”

AI is here to stay and will continue to play a crucial role in every aspect of our lives, so a thorough understanding of this technology and the skills to use it well are of great importance. Instead of considering AI a threat, faculty need to explore its potential and help students navigate it.

This, however, is easier said than done. How exactly can institutions work with AI and integrate it into the learning process? Faculty need proven strategies, as well as concrete examples of AI implementation.

That’s why, in this article, we compile several examples of embracing AI to enhance student engagement and nurture lifelong skills. They were shared by Dr. John Fitzgibbon, Associate Director for Digital Learning Innovation in CDIL at Boston College, and Nathan Riedel, Instructional Technologist at Fort Hays State University, in a webinar on embracing AI.

Activities to enhance student understanding of AI

For Dr. Fitzgibbon, before designing any activity that involves AI, faculty should develop a firm grasp of the technology by:

- Exploring: Try using AI tools for your own work and life, such as course content development, email writing, and more. Also engage in discussion with colleagues or reach out to the support departments at your institution to learn more about AI developments.

- Identifying: Based on the self-exploration and discussion, you can analyze and detect the opportunities and limitations of AI.

- Reflecting: Once you are clear about the pros and cons, ask yourself these questions: “How can I leverage what is good about AI?” and “How can I make students aware of what is bad about AI and practice overcoming it?” Finding answers to these will help you plan effective activities.

Following these 3 principles, Dr. Fitzgibbon was able to come up with several learning activities to help students discover AI technology.

Activity 1: Students identify benefits and limitations of AI tools

For this activity, Dr. Fitzgibbon prepared 4 answer options for each of his mid-term test questions (one of which was written by ChatGPT) and asked students to decide on the best answer choice.

Students were then encouraged to explain their choice: Why do they think this answer is the best? What makes it a sufficient answer?

If students chose the AI-generated option, Dr. Fitzgibbon would point out the limitations of these responses, such as referencing problems or false information, even though they seem to be perfectly crafted.

Doing this helps students understand the capacity of generative AI tools: their potential and also their limitations.

Activity 2: Students critically evaluate AI-generated answers

Together with a faculty member in the finance department, Dr. Fitzgibbon used generative AI tools to train critical analysis and evaluative skills.

For a mid-term exam on bonds and different financial products, one of the questions asked students to calculate a bond’s value: “How much does the principal of a bond (the $1000) to be received upon a bond’s maturity in 5 years add to the bond’s current price if the appropriate discount rate is 6%?”
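To see why a question like this is mechanical, here is a minimal sketch of the calculation it asks for: the present value of a single future payment. It assumes annual compounding, which the question does not state explicitly, and the function name `present_value` is purely illustrative.

```python
def present_value(future_amount: float, annual_rate: float, years: int) -> float:
    """Discount one future payment back to today.

    Assumes the discount rate compounds once per year (an assumption,
    since the exam question does not specify the compounding frequency).
    """
    return future_amount / (1 + annual_rate) ** years


# The $1000 principal, received in 5 years, discounted at 6% per year.
principal_pv = present_value(1000, 0.06, 5)
print(f"{principal_pv:.2f}")  # prints 747.26
```

Under that assumption the principal adds about $747.26 to the bond’s current price. The answer is a single formula, which is the kind of task a generative AI tool handles readily.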

However, this question did not address students’ higher-order thinking skills and could easily be answered by ChatGPT. Dr. Fitzgibbon noted:

“So what are we really looking for in this question? Do you want your students to thoroughly understand bonds, or do you just want them to calculate bonds?”

That’s why they decided to change the question into a critical analysis one, in which students needed to critically reflect on an AI-generated answer regarding the impact of bonds. Below is the modified question:

Think for a moment what the impact would be upon that particular company (from the questions above) if Congress, in their infinite wisdom, decided to ban Bonds entirely. Let’s stipulate that the company has been using Bonds for some years to fund operations, working capital, and growth.

I asked ChatGPT this question for a Tire Manufacturer, here is their answer:

For this question, please critique the ChatGPT statement, telling me what ChatGPT got right and what it got wrong here, and what important issue(s) did ChatGPT miss?

Be very specific, unlike ChatGPT, I’m looking for answers that give examples about particular companies, including drawing directly from our course readings and discussion.

Activities to develop critical evaluation and feedback skills

For instructional technologist Nathan Riedel, nurturing a growth mindset and lifelong skills, especially in the age of AI, has become the core mission of higher education.

“What is important is to encourage a growth mindset. This is about learning. Mistakes are okay.”

That’s why Nathan came up with several learning activities to help students develop not only critical reflection on AI-generated content, but also feedback and collaboration skills. He also used several FeedbackFruits solutions to support the implementation of these activities.

Activity 1: Critical analysis of ChatGPT-generated content using technology

For this activity, students needed to come up with a number of questions and use ChatGPT to generate the answers, which were then uploaded to FeedbackFruits Assignment Review. Within this tool, the instructor closely analyzed the AI-generated content, annotated important sections, and provided comments, feedback, and questions, and even gave suggestions on how to refine the prompts for AI. According to Nathan, this process helps students identify the deficiencies in AI-generated information and encourages them to go out and look for these holes, or what is lacking in the language model.

Some of the questions that students used in this activity are:

- Can you explain and provide an example of Regular Substantive Interaction/ Collaborative learning?

- Can you suggest a free alternative to Adobe Photoshop?

According to Nathan, this activity encourages students to actively and critically regard the AI content and detect the existing flaws.

“By asking students to thoroughly analyze ChatGPT content, they can find the deficiencies. In other words, you’re encouraging your students to go out and look for these holes. They can find the knowledge that is lacking in the language model.”

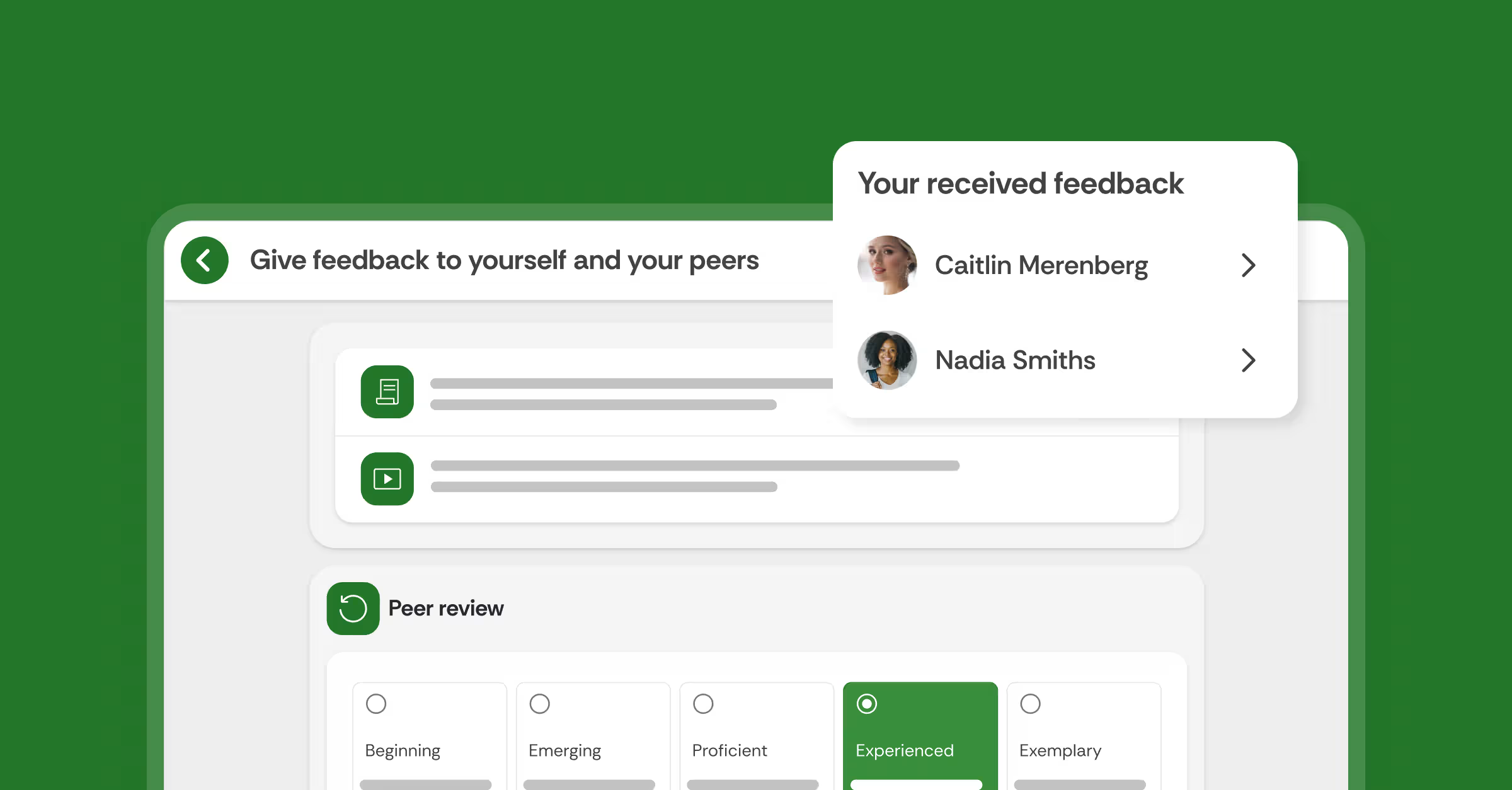

Activity 2: Peer review AI-generated content

The same critical analysis activity can be upgraded into a peer and group assessment component, in which students upload their ChatGPT-generated answers and give feedback on each other’s submissions.

Setting up this extension would normally take considerable time: assigning peer reviewers or group members, developing feedback rubric criteria, and so on. However, Nathan opted for FeedbackFruits Peer Review to streamline the entire review process.

Within the tool, students can easily upload their AI content and are assigned another peer’s work to review based on a set of criteria. They can also annotate the submission and add questions or discussion points for further exchange.

Not only does this activity encourage critical reflection on AI content, but it also creates plenty of feedback opportunities and interaction moments where students can develop social and collaborative learning skills.

Activity 3: Self-reflection of AI content

Besides peer and group feedback, self-reflection constitutes a critical part of feedback skills. That’s why Nathan suggested adding another layer to the critical analysis activity: self-evaluation of AI-generated content. For this he relied on FeedbackFruits Self Assessment. With this tool, students upload their ChatGPT-generated transcripts and critically analyze them based on a set of criteria. At the same time, instructors can access students’ progress, comment on the analysis, and provide timely support. This activity, according to Nathan, stimulates self-regulatory skills and teacher-student interactions, while “encouraging students to think carefully about their interactions with AI”.

Activity 4: Utilize AI-powered feedback

Effective, quality feedback needs to be continuous, growth-oriented, and personalized. However, achieving this can be quite challenging, especially with a large student cohort. This is where AI can be leveraged to support instructors in the feedback delivery process, and FeedbackFruits Automated Feedback was developed to fulfil this role. Powered by AI, this LMS plug-in scans students’ writing and provides instant, formative feedback on structural writing elements like citation, academic style, grammar, and structure, which leaves teachers with more time to address higher-order cognitive skills like comprehension and critical argumentation. Based on this feedback, students can iterate and produce a better final work.

Nathan used the tool to let students receive automated feedback on their ChatGPT transcripts against a set of pre-selected writing criteria (grammar, personal pronouns, reference, literature review, content and structure, etc.). Students are also encouraged to decide which feedback to follow by indicating whether each comment is helpful or not.

Use AI to guide students in giving feedback

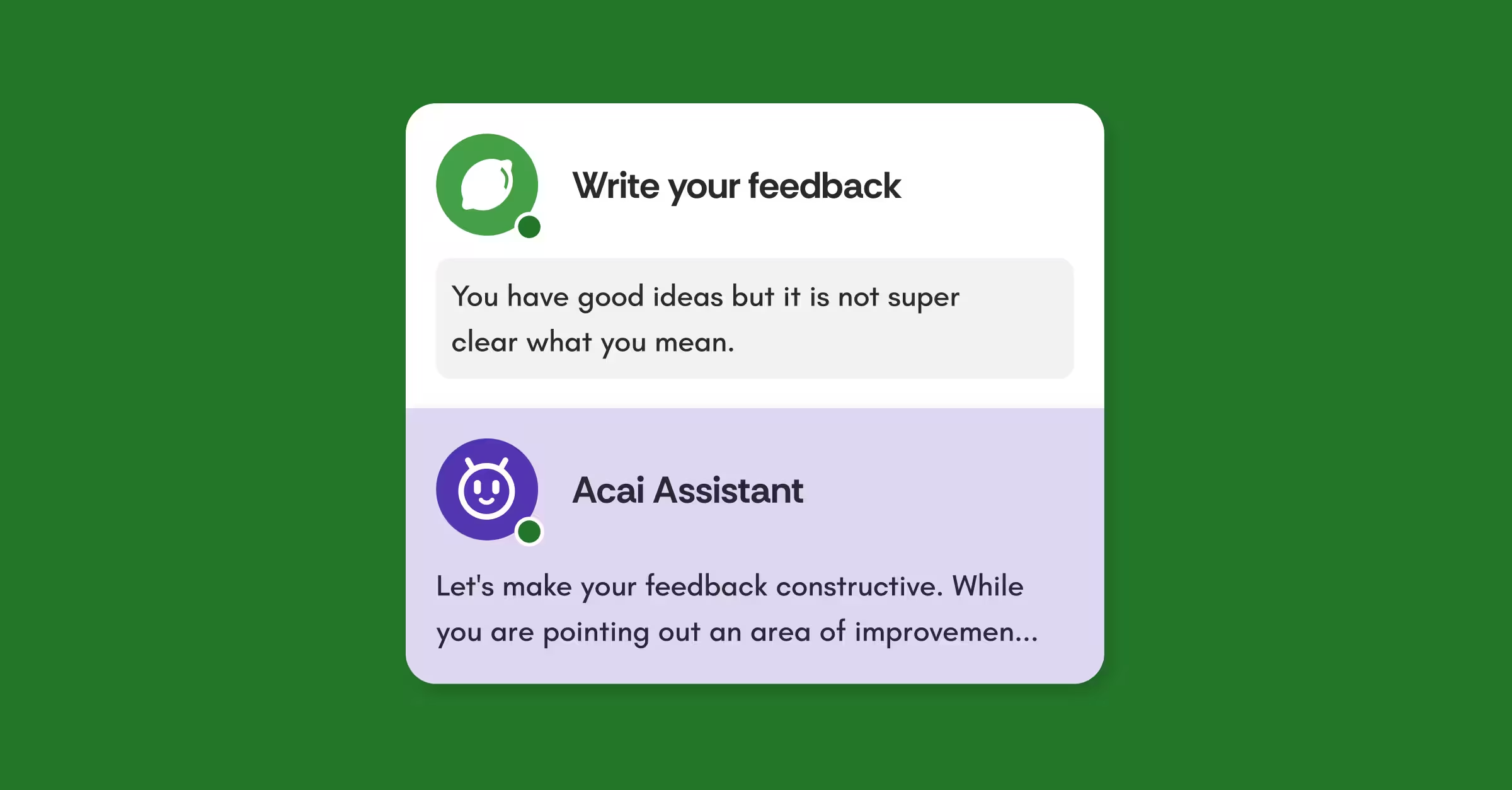

Besides generating personalized feedback on academic writing, FeedbackFruits also harnessed AI power to guide students in delivering better feedback. The Automated Feedback Coach was introduced as an assistant to help students provide quality feedback during the peer or group assessment activity.


An important part of the feedback process is letting students know how to deliver good feedback. In practice, students’ feedback is often too short, overly positive, or overly negative. This is due to a lack of guidance and also an unwillingness to complete the activity. It is also challenging for instructors to follow each student’s feedback delivery process and provide individual instruction. That's why we introduced the Automated Feedback Coach to assist educators in guiding students to deliver better feedback.

The first beta version was available as a plugin for the Group Member Evaluation tool. Instructors can activate this feature to automatically generate real-time suggestions when a student’s feedback is too short, too general, overly positive or negative, reads like a personal attack, or overlaps with other feedback already given. The AI plug-in also identifies and praises students for quality feedback comments.

This year, we have further developed the Automated Feedback Coach to provide better real-time feedback tips and help students nurture feedback skills and deliver higher-quality comments.

The new version, Automated Feedback Coach 2.0, uses advanced Large Language Models provided by Azure OpenAI Service to process students’ input and provide feedback. We chose Azure OpenAI Service to deliver this feature because of its enterprise-level security, compliance, and regional availability.

Compared to version 1.0, it is much faster, more scalable, and able to provide more specific, actionable feedback based on the review’s context.

For more information on Automated Feedback Coach 2.0, check out this article.

Further resources

For more ideas and strategies on utilizing AI tools, you can check the following resources:

- FbF AI resources hub: A collection of resources on AI including articles, use cases, tools, and more that will help you and your faculty embrace AI technology (such as ChatGPT) in every teaching and learning aspect: from course design, assessment, technology adoption, to policy making.

- Authentic assessment learning journey: A 7-step framework on how to design and facilitate authentic assessment activities that encourage growth mindset and nurture lifelong skills.

- ChatGPT: How to adapt your courses for AI?: Suggestions on how institutions can adapt their courses to embrace the rise of ChatGPT and AI technology.
